
Powering Real-Time Decisions with PhonePe’s Garfield Platform and Apache Druid

Nitish Goyal, 04 November 2025

At PhonePe’s scale, handling millions of transactions and user interactions every hour requires an analytics platform that’s not just fast, but instant. When every millisecond counts — both for user experience and incident detection — batch processing simply doesn’t cut it.

Enter Garfield, PhonePe’s custom-built, high-throughput event analytics platform, engineered around the power of Apache Druid. Garfield is the engine that transforms raw user events into actionable, real-time insights, enabling product, engineering, and business teams to monitor performance and understand user journeys as they unfold.

Scaling Insights: The Garfield Platform at a Glance

The Garfield platform is built for extreme scale and velocity: it processes more than 500 million messages per day (an average of about 5,800 per second), with a peak ingestion rate of around 40,000 messages per second. To keep the raw data volume manageable, we employ client-side sampling, so that only a representative subset of events enters the pipeline.
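The article does not describe how client-side sampling is implemented. As a minimal sketch, assuming a deterministic hash-based scheme keyed on a session identifier (the function names and the choice of SHA-256 are illustrative, not Garfield's actual method), sampling could look like this:

```python
import hashlib


def should_sample(session_id: str, sample_rate: float) -> bool:
    """Decide whether a session's events are sent, given a rate in [0, 1].

    Hashing the session id (an assumption) keeps the decision stable, so a
    session is either fully captured or fully dropped.
    """
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < sample_rate


def scale_up(sampled_count: int, sample_rate: float) -> float:
    """Estimate the unsampled total from a count of sampled events."""
    return sampled_count / sample_rate
```

Keeping whole sessions together, rather than sampling individual events, is one way to preserve user journeys in the sampled data; counts read from the sampled stream then need to be scaled by the inverse of the rate.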

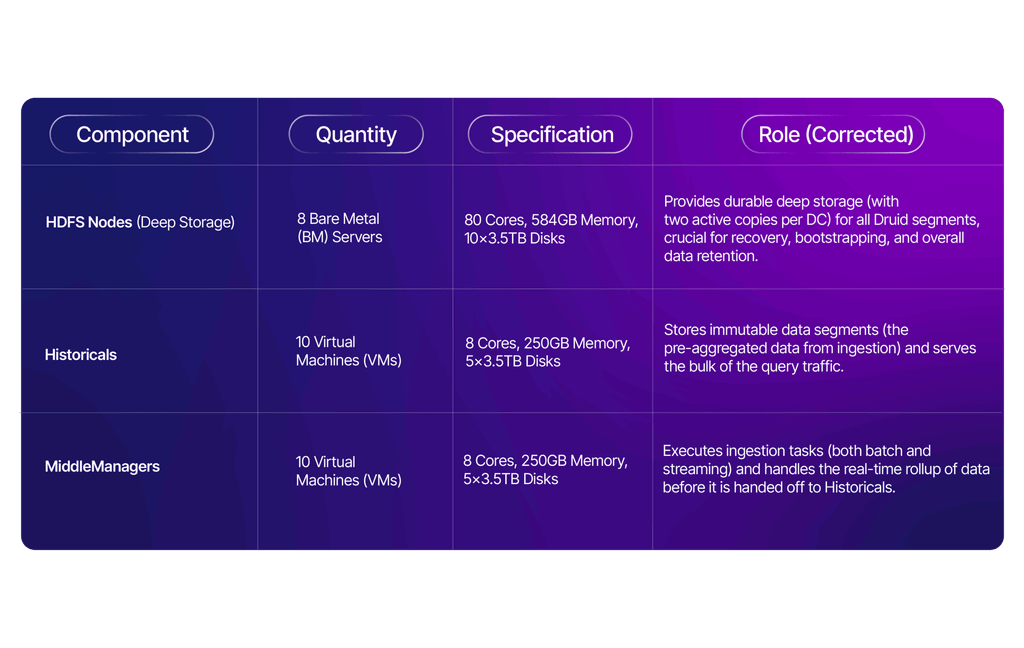

The Druid Cluster Powering Garfield

Our performance is underpinned by a robust, dedicated Druid cluster.

Real-Time Aggregation and Cluster Tuning

While the Garfield service prepares and isolates the data (a separate Kafka topic for each client), the heavy lifting of summarization happens downstream. The performance of this step relies heavily on fine-tuned Druid configurations.

Data rollup (our target ratio is 5:1) occurs at the Druid ingestion layer, where nodes are configured for efficiency. Segments are capped at 1 million rows to balance segment size and parallelism. Rows are buffered in memory (at most 100,000) before being persisted, and data is compressed with LZ4 for faster decompression at query time.
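To make the rollup ratio concrete, here is a small simulation of what rollup does conceptually: events that share a time bucket and the same dimension values collapse into one stored row. This is a sketch of the idea only, not Druid's implementation, and the field names (`ts`, `eventType`, `platform`, `latencyMs`) are hypothetical.

```python
from collections import defaultdict


def rollup(events, bucket_seconds):
    """Collapse events with the same time bucket and dimensions into one row."""
    rows = defaultdict(lambda: {"count": 0, "latencyMsSum": 0})
    for event in events:
        # Truncate the epoch-second timestamp to the start of its bucket.
        bucket_start = event["ts"] - event["ts"] % bucket_seconds
        key = (bucket_start, event["eventType"], event["platform"])
        rows[key]["count"] += 1
        rows[key]["latencyMsSum"] += event["latencyMs"]
    return dict(rows)


def rollup_ratio(events, rows):
    """Ingested events per stored row; 5.0 corresponds to a 5:1 rollup."""
    return len(events) / len(rows)


# Ten hypothetical events spread over two 30-minute (1800 s) buckets.
events = [
    {"ts": ts, "eventType": "PAGE_VIEW", "platform": "android", "latencyMs": 100}
    for ts in (0, 300, 600, 900, 1200, 1800, 2100, 2400, 2700, 3000)
]
rows = rollup(events, bucket_seconds=1800)
ratio = rollup_ratio(events, rows)
```

The ratio falls as dimension cardinality rises, because fewer events share an identical combination of values within a bucket.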

For our largest clients, segment granularity is set to 1 day and query granularity to 30 minutes, which enables high-resolution trend analysis. We also use Druid’s sketch aggregators: ThetaSketch on the sessionId field for approximate distinct counting with tunable accuracy, and QuantilesDoublesSketch on the remaining fields for efficient percentile calculation.
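The settings described in this section could be expressed in a Druid Kafka supervisor spec roughly as follows. This is a sketch under assumptions: the article does not show the actual spec, so the datasource, topic, broker address, dimension, and metric names are placeholders, and the field names follow the Druid Kafka ingestion documentation as commonly used, so they are worth checking against the Druid version in use. The sketch aggregators require the DataSketches extension to be loaded.

```python
import json


def build_supervisor_spec(datasource, topic, bootstrap_servers,
                          dimensions, percentile_fields):
    """Build a Kafka supervisor spec dict using the values quoted in the text."""
    metrics = [
        {"type": "count", "name": "count"},
        # Approximate distinct sessions.
        {"type": "thetaSketch", "name": "sessionIdSketch", "fieldName": "sessionId"},
    ]
    for field in percentile_fields:
        # Approximate percentiles on numeric fields.
        metrics.append({"type": "quantilesDoublesSketch",
                        "name": f"{field}Sketch", "fieldName": field})
    return {
        "type": "kafka",
        "spec": {
            "dataSchema": {
                "dataSource": datasource,
                "timestampSpec": {"column": "timestamp", "format": "millis"},
                "dimensionsSpec": {"dimensions": dimensions},
                "metricsSpec": metrics,
                "granularitySpec": {
                    "type": "uniform",
                    "segmentGranularity": "DAY",
                    "queryGranularity": "THIRTY_MINUTE",
                    "rollup": True,
                },
            },
            "ioConfig": {
                "topic": topic,
                "inputFormat": {"type": "json"},
                "consumerProperties": {"bootstrap.servers": bootstrap_servers},
            },
            "tuningConfig": {
                "type": "kafka",
                "maxRowsInMemory": 100000,
                "maxRowsPerSegment": 1000000,
                "indexSpec": {"dimensionCompression": "lz4",
                              "metricCompression": "lz4"},
            },
        },
    }


# Placeholder names, for illustration only.
spec = build_supervisor_spec("client_a_events", "client_a_topic", "kafka:9092",
                             ["eventType", "platform"], ["latencyMs"])
spec_json = json.dumps(spec, indent=2)
```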

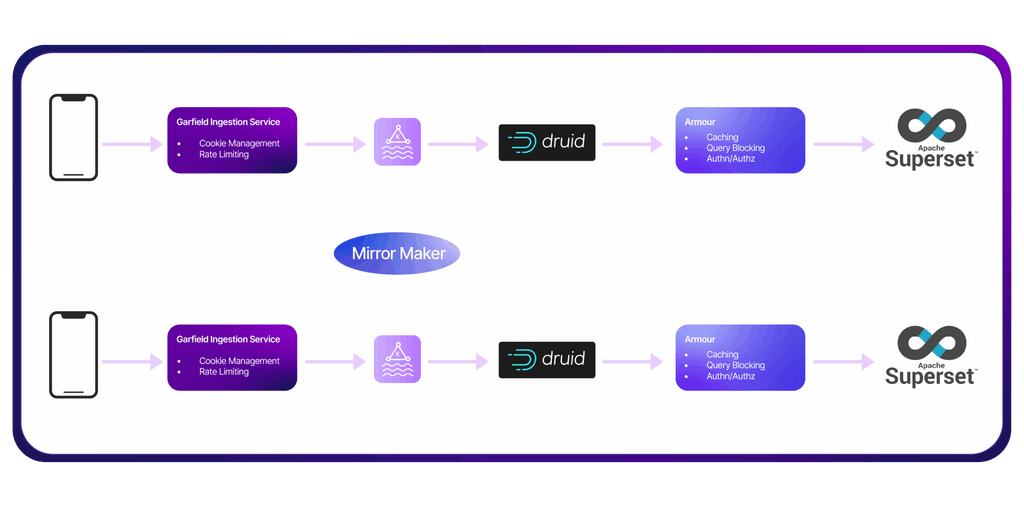

Geo-Redundancy: Active-Active Data Centers

To ensure high availability and disaster recovery, Garfield operates in an Active-Active setup across two data centers (DCs).

- Intra-DC Redundancy: Within each data center, we maintain two active copies of the data in HDFS, providing immediate local redundancy.

- Cross-Region Replication: Data is replicated asynchronously between the two geographic regions using Kafka MirrorMaker. This ensures that the event streams are consistently available in both DCs, where they are then independently replayed into the local Druid clusters for query serving.

This architecture ensures maximum resilience and allows either data center to serve analytics traffic seamlessly.

The Engine Room: Garfield Ingestion Intelligence

The core strength of our platform lies in the Garfield Event Ingestion Service, a robust layer designed to manage, clean, and enrich the massive incoming streams before they reach Kafka. This service captures events from applications deployed both outside the network (mobile apps and web) and inside the network (backend services).

Crucial functions performed by the Garfield Ingestion Service:

- Schema Validation: Every incoming event is strictly validated against its defined schema. This prevents “garbage” data from contaminating our analytics platform.

- Service Rate Limiting: As a multi-tenant system, Garfield applies a soft rate limit on the ingestion pipeline itself, which protects the platform from traffic spikes.

- Cookie Management: The service actively manages cookie data for accurate session counts and unique user analysis, enriching the events before they proceed.
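The schema validation and rate limiting functions above can be sketched as follows. The article does not describe the schema format or the limiter algorithm, so both are assumptions here: the schema is a plain mapping of field name to Python type, and the limiter is a per-tenant token bucket. What "soft" means in practice is also not specified; in this sketch the limiter only reports whether an event is over the limit and leaves the decision to the caller.

```python
import time


def validate_event(event, schema):
    """Return a list of problems; an empty list means the event is valid."""
    problems = []
    for field, expected_type in schema.items():
        if field not in event:
            problems.append(f"missing field: {field}")
        elif not isinstance(event[field], expected_type):
            problems.append(f"wrong type for {field}")
    for field in event:
        if field not in schema:
            problems.append(f"unexpected field: {field}")
    return problems


class TokenBucket:
    """Token bucket: refills at rate_per_second, holds at most capacity tokens."""

    def __init__(self, rate_per_second, capacity, clock=time.monotonic):
        self.rate = rate_per_second
        self.capacity = capacity
        self.clock = clock  # injectable so the limiter can be tested
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self):
        """Consume one token if available; return False when over the limit."""
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A service would typically keep one bucket per tenant, for example in a dictionary keyed by client id, so that a spike from one client does not consume another client's budget.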

Garfield’s Core Use Cases: Actionable Insights

Garfield transforms these aggregated event streams into three core areas of value:

- Real-Time Operational Monitoring: Tracking high-volume system and performance events (API latency, error rates) for immediate incident triage and system stability assurance.

- Deep Product & Customer Journey Analytics: Analyzing user interaction and journey events for funnel optimization, A/B test result evaluation, and tracking feature adoption to drive product refinement.

- Security and Business Risk: Utilizing behavioral and transactional event patterns for real-time fraud pattern detection and security monitoring.

Data Governance and Cluster Scalability

Scaling and Standardizing with Strict Dimensionality

To maintain scalability across a diverse client base, we enforce strict data governance:

- Dimensionality Control: We impose a hard control on event complexity, limiting any single event to a maximum of 20 custom dimensions (tags). This is the key to controlling cardinality and maintaining high query speeds.

- Common Dimensions: In addition to these 20 client-managed tags, there are a few additional common dimensions shared across all clients. These standardized fields are used to plot high-level trends and facilitate cross-client comparisons, while the custom tags enable client-specific use cases.

- Platform Agnostic Model: The combination of common dimensions and client-specific tags ensures the core ingestion and querying model remains client-agnostic and highly scalable.
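The split between common dimensions and client-managed tags, together with the 20-tag cap, could be enforced with a check along these lines. This is an illustrative sketch: the common dimension names below are hypothetical, since the article does not list them.

```python
MAX_CUSTOM_TAGS = 20  # the limit stated in the text

# Hypothetical names; the real common dimensions are not listed in the article.
COMMON_DIMENSIONS = ("eventType", "platform", "appVersion")


def split_dimensions(event):
    """Separate common dimensions from custom tags and enforce the tag cap."""
    common = {k: v for k, v in event.items() if k in COMMON_DIMENSIONS}
    custom = {k: v for k, v in event.items() if k not in COMMON_DIMENSIONS}
    if len(custom) > MAX_CUSTOM_TAGS:
        raise ValueError(
            f"{len(custom)} custom tags exceeds the limit of {MAX_CUSTOM_TAGS}"
        )
    return common, custom
```

Because every client's events reduce to the same shape (a fixed set of common fields plus a bounded set of tags), the same ingestion and query paths can serve all clients.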

Query Protection via Armor

Data is visualized primarily through Superset, but all query traffic is routed through Armor, our custom proxy layer.

Superset⟶Armor⟶Druid

Armor acts as a defensive shield for the Druid cluster, handling crucial non-analytic functions:

- Query Governance: Intelligently analyzing and blocking expensive or rogue queries that could destabilize the cluster.

- Authentication and Authorization (Authn/Authz): Securing access to data.

- Caching: Accelerating frequently executed dashboard queries.
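A proxy of this kind could combine query governance and caching roughly as shown below. Armor's actual rules are not described in the article, so everything here is an assumption: the thresholds, the rule of rejecting queries by total interval length and dimension count, and the class and function names. The sketch handles only intervals written as explicit `start/end` timestamps in a list.

```python
import hashlib
import json
from datetime import datetime, timedelta

MAX_INTERVAL = timedelta(days=31)  # hypothetical threshold
MAX_DIMENSIONS = 5                 # hypothetical threshold


def parse_interval(interval):
    """Parse an ISO 8601 'start/end' interval string into two datetimes."""
    start, end = interval.split("/")

    def parse(value):
        # Older Python versions do not accept a trailing 'Z' in fromisoformat.
        return datetime.fromisoformat(value.replace("Z", "+00:00"))

    return parse(start), parse(end)


def rejection_reason(query):
    """Return why a query should be blocked, or None if it may run."""
    total = timedelta()
    for interval in query.get("intervals", []):
        start, end = parse_interval(interval)
        total += end - start
    if total > MAX_INTERVAL:
        return "interval too long"
    if len(query.get("dimensions", [])) > MAX_DIMENSIONS:
        return "too many dimensions"
    return None


class QueryGate:
    """Checks a query, then serves it from cache or forwards it downstream."""

    def __init__(self, run_query):
        self.run_query = run_query  # callable that sends the query to Druid
        self.cache = {}             # no expiry here; a real cache needs a TTL

    def execute(self, query):
        reason = rejection_reason(query)
        if reason:
            raise PermissionError(reason)
        # Canonical JSON so logically identical queries share a cache key.
        key = hashlib.sha256(
            json.dumps(query, sort_keys=True).encode("utf-8")
        ).hexdigest()
        if key not in self.cache:
            self.cache[key] = self.run_query(query)
        return self.cache[key]
```

For real-time dashboards, cached results would need a short time-to-live, since a result covering the current time bucket goes stale as new events arrive.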

By combining the low-latency processing of the Garfield Ingestion Service, the power of Apache Druid’s rollup, and the robust governance of Armor, PhonePe has built an analytics platform capable of driving instantaneous, data-driven decisions at a continental scale.